Executive Summary

Ungoverned. That is the quiet failure mode behind most modern enterprise AI incidents. Systems don't collapse dramatically. They drift slowly. They produce outputs that sound correct, look statistically normal, and yet deviate from the world they are supposed to govern.

This is why traditional ML monitoring needs an upgrade. Accurate models can still fail catastrophically when they drift along dimensions that enterprises rarely measure:

- Emotional intent

- Context alignment

- Risk posture

- Semantic boundaries

- Temporal pattern relevance

To prevent these silent failures, AI must be governed not by static documentation but by a living architectural layer embedded directly in the intelligence stack.

In this article, we examine how drift-aware governance, runtime oversight, and continuous verification inside the Governance Layer form the foundation of Adaptive Intelligence Layers' approach to safe, aligned, enterprise-grade AI systems.

Introduction – Why Drift, Not Inaccuracy, Breaks AI

Artificial intelligence models adapt, absorb, and generalize in ways that are powerful, flexible, and inherently unstable. A system can be 99% accurate on paper and still generate catastrophic outcomes when it drifts away from:

- the environment it operates in

- the population it serves

- the risks it must mitigate

- the semantics it must interpret

These failures do not appear as crashes or obvious anomalies. They appear as reasonable but dangerously incorrect outputs, the kind that slip past unmonitored systems precisely because they seem plausible.

This is where Adaptive Intelligence Layers™ begins:

Instead of asking "How do we make models more accurate?", we ask "How do we ensure intelligent systems remain aligned with reality?"

Accuracy is not governance, and governance is not a PDF that simply states rules. Governance is an active, interpretable layer that sits between intent, context, and execution.

The Drift Governance Layer exists to fill this architectural void.

Enterprises need a common yardstick for evaluating whether drift is harmless, concerning, or dangerous — especially as multi-model architectures, agentic systems, and runtime workflows introduce new forms of behavioral drift that statistical monitoring cannot detect.

This distinction is why AIL treats governance as a layered architecture rather than a post-hoc monitoring tool — enabling governance-as-code and real-time semantic risk routing before harm occurs.

1. The Drift Problem in Enterprise AI

Traditional MLOps frameworks talk about "data drift" and "model drift," but enterprises experience something far more nuanced and more dangerous.

In production, drift shows up as small shifts that accumulate into systemic misalignment:

- a customer-support assistant that subtly changes tone,

- a fraud model that becomes overly permissive during seasonal cycles,

- a triage system that misinterprets new symptom language,

- or a risk engine that starts normalizing borderline decisions.

None of these looks like an outage, so each can pass as statistically acceptable for a while. And yet collectively, they create exactly what boards and regulators fear most: undetected risk.

To govern AI in the real world, we need a vocabulary that goes beyond accuracy and error rates. We need to talk about operational drift.

2. Degrees of Drift Severity (A Practical Framework)

Enterprises don't just need to know if drift exists. They need to understand how serious it is and what should happen next.

Adaptive Intelligence Layers™ defines three practical levels of drift severity that combine statistical, semantic, and risk-based indicators into a single scale.

Low Drift (Level 1)

Low drift is minor, expected fluctuation. Patterns shift slightly, but operational meaning remains intact.

Signals:

- Small distribution changes

- No safety or compliance risk

- Stable semantics

- Healthy confidence intervals

AIL Response:

Continuous monitoring only. No governance escalation. The system simply watches and learns.

Moderate Drift (Level 2)

Moderate drift is the first sign of emerging misalignment.

Signals:

- Notable pattern shifts

- Early semantic compression between categories

- Context signals weakening

- Risk posture variability

AIL Response:

- Threshold checks

- Precautionary governance rules

- Human review for sensitive or high-impact tasks

Moderate drift is the yellow light: an indicator that the system may soon require active intervention.

High Drift (Level 3)

High drift is where systems become operationally unsafe.

Signals:

- Severe risk posture instability

- High ambiguity between categories that used to be clearly separated

- Safety-critical misclassifications become possible

- Real liability exposure emerges

AIL Response:

- Immediate override into Safe Mode

- Human-in-the-loop required

- Quant Vault incident logging

- Adaptation Layer prompts for retraining and recalibration

At this stage, drift is no longer a model-performance issue. It is a governance emergency.
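The three levels above can be sketched as a small classifier. This is a minimal illustration rather than AIL's actual scoring method: the signal names, the 0-to-1 normalization, and both thresholds are assumptions made for the example, and the response lists simply restate the responses described above.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict, List


class DriftLevel(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


@dataclass(frozen=True)
class DriftSignals:
    # Hypothetical signals, each normalized from 0.0 (stable) to 1.0 (severe).
    distribution_shift: float
    semantic_overlap: float
    risk_instability: float


# Illustrative thresholds only; real values would be tuned per deployment.
MODERATE_THRESHOLD = 0.3
HIGH_THRESHOLD = 0.6

# Responses taken from the three severity levels described in this section.
RESPONSES: Dict[DriftLevel, List[str]] = {
    DriftLevel.LOW: ["continuous monitoring"],
    DriftLevel.MODERATE: [
        "threshold checks",
        "precautionary governance rules",
        "human review for sensitive or high-impact tasks",
    ],
    DriftLevel.HIGH: [
        "Safe Mode override",
        "human-in-the-loop",
        "Quant Vault incident logging",
        "retraining and recalibration prompt",
    ],
}


def classify_drift(signals: DriftSignals) -> DriftLevel:
    """Map the worst individual signal onto a severity level."""
    worst = max(
        signals.distribution_shift,
        signals.semantic_overlap,
        signals.risk_instability,
    )
    if worst >= HIGH_THRESHOLD:
        return DriftLevel.HIGH
    if worst >= MODERATE_THRESHOLD:
        return DriftLevel.MODERATE
    return DriftLevel.LOW


level = classify_drift(DriftSignals(0.2, 0.45, 0.1))
print(level.name, RESPONSES[level])
```

Taking the maximum rather than an average is a deliberate choice in this sketch: a single severe signal, such as risk posture instability, escalates the level even when the other signals look calm.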

3. Why Statistical Drift ≠ Operational Drift

Statistical drift measures shifts in the numbers: feature distributions, prediction frequencies, or error curves. It is useful, but incomplete.
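As one concrete example of a statistical drift measure, the sketch below computes a Population Stability Index (PSI) over category shares. PSI is a common general-purpose metric, not something this article prescribes; the category names and share values are invented for illustration.

```python
import math
from typing import Dict


def population_stability_index(
    expected: Dict[str, float],
    actual: Dict[str, float],
    eps: float = 1e-6,
) -> float:
    """PSI = sum over categories of (actual - expected) * ln(actual / expected).

    Inputs are category shares that each sum to roughly 1.0. Shares are
    floored at `eps` so that empty categories do not cause division by zero.
    """
    psi = 0.0
    for category in set(expected) | set(actual):
        e = max(expected.get(category, 0.0), eps)
        a = max(actual.get(category, 0.0), eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical request-category shares before and after a seasonal surge.
baseline_shares = {"lost_item": 0.50, "flight_info": 0.45, "medical_emergency": 0.05}
current_shares = {"lost_item": 0.70, "flight_info": 0.27, "medical_emergency": 0.03}

print(round(population_stability_index(baseline_shares, current_shares), 3))
```

A number like this reports that the input mix has moved. It says nothing about whether the system's handling of the rarest, highest-stakes category has changed.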

What actually matters in enterprise environments is operational drift: the degree to which system behavior diverges from the real world and from the organization's risk appetite.

Examples of operational drift include:

- A customer service assistant that becomes curt or dismissive in tone (emotional drift)

- A fraud model that begins approving more borderline cases due to seasonal data patterns (risk drift)

- A medical triage system that misreads new or colloquial symptom language (context drift)

In each case, statistical monitoring might say "all is well" while the risk profile is quietly degrading.

Statistical drift explains how numbers move; operational drift explains how harm happens.

The Drift Governance Layer is explicitly designed to govern operational drift—the category of drift that most monitoring tools never see.
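A small worked example shows how an aggregate metric can stay healthy while the risk-relevant behavior degrades. The counts below are synthetic and chosen only to make the arithmetic visible; the labels and helper names are hypothetical.

```python
from typing import List, Tuple

Record = Tuple[str, str]  # (true_label, predicted_label)


def accuracy(records: List[Record]) -> float:
    """Share of all records whose prediction matches the true label."""
    return sum(t == p for t, p in records) / len(records)


def recall_for(records: List[Record], label: str) -> float:
    """Share of records with the given true label that were predicted correctly."""
    relevant = [(t, p) for t, p in records if t == label]
    if not relevant:
        raise ValueError(f"no records with true label {label!r}")
    return sum(t == p for t, p in relevant) / len(relevant)


def make_records(routine_ok: int, emergency_ok: int, emergency_missed: int) -> List[Record]:
    """Build a synthetic evaluation set from three counts."""
    return (
        [("lost_item", "lost_item")] * routine_ok
        + [("emergency", "emergency")] * emergency_ok
        + [("emergency", "lost_item")] * emergency_missed
    )


# Synthetic data: routine volume doubles while emergency handling degrades.
baseline = make_records(routine_ok=950, emergency_ok=47, emergency_missed=3)
drifted = make_records(routine_ok=1950, emergency_ok=35, emergency_missed=15)

for name, records in (("baseline", baseline), ("drifted", drifted)):
    print(name, round(accuracy(records), 4), round(recall_for(records, "emergency"), 2))
```

In this toy data, overall accuracy moves by less than half a percentage point while emergency recall falls from 0.94 to 0.70. A dashboard tracking only the first number would report that all is well.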

4. The Governance Layer – Two Pillars and the X-Point Process

The Governance Layer is the interpretive and decision-making backbone of Adaptive Intelligence Layers™. It is intentionally modular and implementation-agnostic, powerful enough to govern heterogeneous AI stacks without exposing sensitive internals.

It rests on two foundational pillars:

Pillar 1 – Interpretive Governance

Interpretive Governance evaluates what the model is doing in context.

It asks:

- Does this output reflect operational reality?

- Does it cross a safety or risk boundary?

- Does it violate a regulatory expectation?

- Does it require elevated oversight?

This pillar governs alignment, asking whether the system's internal reasoning still matches the world it operates in.

Pillar 2 – Directive Governance

Directive Governance determines what should happen next.

It decides:

- Should this be escalated?

- Should routing switch to a human operator?

- Should Safe Mode be engaged?

- Should this event be written into the Quant Vault?

- Should thresholds or policies be updated?

This pillar governs action, ensuring that the right step is taken at the right time.

The Governance X-Point Process

Between these pillars, AIL evaluates each decision through a structured X-Point rule sequence whose length scales with system complexity:

- Simple stacks: ~6 rule evaluations

- Moderately complex stacks: 10–14 rules

- Large enterprise ecosystems: 20+ rules

Every evaluation results in:

- A governance interpretation

- A governance action

- An auditable record stored in the Quant Vault

This gives enterprises the assurance they need: every decision has passed through a consistent governance process.

The Governance Layer works as a persistent runtime supervisor, continuously evaluating output behavior, contextual signals, and risk posture — a form of adaptive governance architecture designed for evolving, high-stakes systems.
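The rule sequence described above can be sketched as an ordered list of rule functions, each returning either a verdict or nothing. This is an assumption-laden illustration, not the X-Point implementation: the rule names, event fields, first-match-wins ordering, and the plain list standing in for the Quant Vault are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass(frozen=True)
class Verdict:
    interpretation: str  # what the governance layer concluded
    action: str          # what should happen next


Event = Dict[str, Any]
Rule = Callable[[Event], Optional[Verdict]]


def emergency_semantics_rule(event: Event) -> Optional[Verdict]:
    # Hypothetical rule: never let emergency-like content be down-ranked.
    if event.get("touches_emergency_semantics") and event.get("predicted_priority") == "low":
        return Verdict("possible safety-critical misclassification", "route_to_human")
    return None


def high_drift_rule(event: Event) -> Optional[Verdict]:
    # Hypothetical rule: Level 3 drift forces Safe Mode.
    if event.get("drift_level", 1) >= 3:
        return Verdict("high drift", "engage_safe_mode")
    return None


def default_rule(event: Event) -> Optional[Verdict]:
    return Verdict("within expected behavior", "allow")


RULES: List[Rule] = [emergency_semantics_rule, high_drift_rule, default_rule]


def evaluate(event: Event, rules: List[Rule], audit_log: List[Dict[str, str]]) -> Verdict:
    """Run rules in order; the first verdict wins and is always recorded."""
    for rule in rules:
        verdict = rule(event)
        if verdict is not None:
            audit_log.append({
                "rule": rule.__name__,
                "interpretation": verdict.interpretation,
                "action": verdict.action,
            })
            return verdict
    raise ValueError("rule sequence must end with a rule that always returns a verdict")


audit_log: List[Dict[str, str]] = []  # stand-in for the Quant Vault
event = {"predicted_priority": "low", "touches_emergency_semantics": True, "drift_level": 2}
print(evaluate(event, RULES, audit_log).action)
```

Each call yields the three outputs listed above: an interpretation, an action, and a record. Longer sequences for more complex stacks would extend the `RULES` list without changing `evaluate`.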

5. Case Study – The Airport Emergency Misclassification

This scenario is a composite case study informed by real operational drift patterns observed across enterprise AI deployments.

It demonstrates how small deviations in context interpretation compound into operational risk, and how runtime governance prevents catastrophic misclassification even when a model appears 'healthy.'

During peak winter travel, a major international airport experienced a 41% surge in "lost luggage" submissions over four weeks. Unmonitored, the AI assistant absorbed this imbalance and slowly reshaped its classification boundaries.

By Week 5:

- Emergency triage accuracy had dropped from 94% to 71%

- Category boundary overlap between "medical emergency" and "lost item" had increased by 62%

- If risk posture indicators had been measured, they would have shown a clear deviation from baseline

Despite that, no anomaly alert fired. On the aggregate statistics that were being watched, the system looked fine.

Then an incident occurred.

A traveler submitted:

"My father collapsed near Gate 12. He isn't responding."

The drift-biased model misclassified it as:

Low-priority: misplaced personal item.

Emergency response was delayed by 11 minutes—the difference between rapid stabilization and critical deterioration.

The failure wasn't accuracy. Nor was it the model. It was governance—or rather the absence of it.

How AIL Would Have Changed the Outcome

With Adaptive Intelligence Layers™ in place, the incident trajectory looks very different:

- Week 2: Context drift is detected as "lost luggage" patterns begin to dominate. Governance alerts are issued.

- Week 3: Risk drift escalates from moderate to high. High-risk classifications begin to require human review.

- Week 4: X-Point Governance rules activate Safe Mode for ambiguous alerts touching emergency semantics.

- Week 5: When the collapse message arrives, it is automatically routed to a high-sensitivity path with mandatory human oversight and cannot be down-ranked to "low priority."

In parallel:

- The Quant Vault stores a complete, tamper-evident audit trail of drift signals, governance decisions, and actions taken.

- The Adaptation Layer recalibrates thresholds for seasonal behavior, strengthening emergency detection for the rest of the travel period.
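One common way to make an audit trail tamper-evident is a hash chain, in which every entry commits to the hash of the entry before it. The article does not say how the Quant Vault achieves this property, so the sketch below is a generic illustration with hypothetical function names and payload fields, not a description of the product.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: Dict[str, Any]) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(chain: List[Dict[str, Any]], payload: Dict[str, Any]) -> Dict[str, Any]:
    """Append a payload, linking it to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    entry = {"payload": payload, "prev_hash": prev_hash, "hash": _digest(prev_hash, payload)}
    chain.append(entry)
    return entry


def verify_chain(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; any edited, removed, or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


vault: List[Dict[str, Any]] = []
append_entry(vault, {"week": 2, "signal": "context drift", "action": "governance alert"})
append_entry(vault, {"week": 4, "signal": "high drift", "action": "engage Safe Mode"})
print(verify_chain(vault))

vault[0]["payload"]["action"] = "none"  # simulate after-the-fact tampering
print(verify_chain(vault))
```

A chain like this detects edits but does not prevent them. A production system would also need to protect the latest hash itself, for example by storing it somewhere the log writer cannot modify.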

Instead of a near-catastrophic delay, the story ends in containment and learning. The system becomes more resilient rather than less.

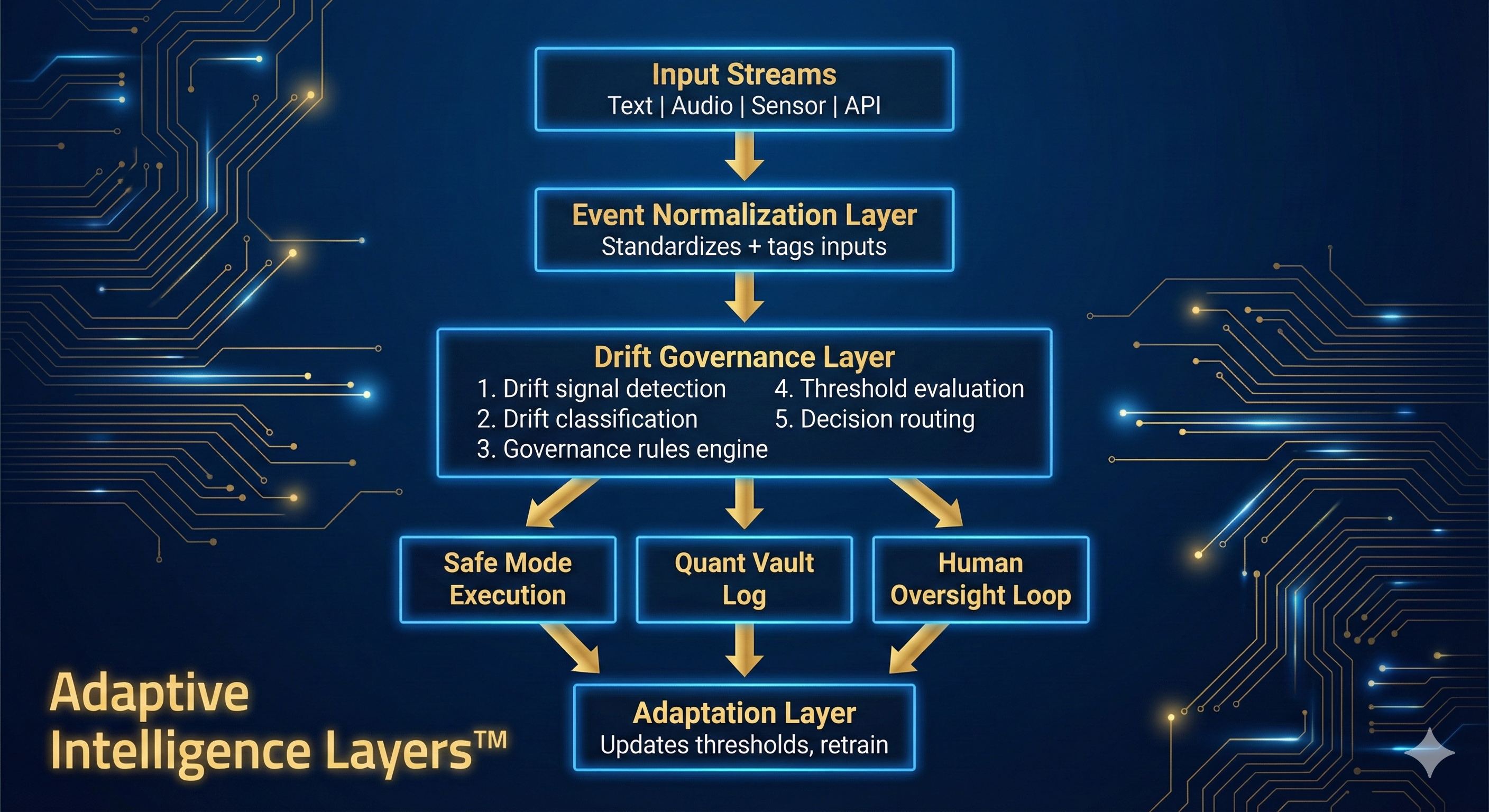

6. Architecture Diagram

Adaptive Intelligence Layers™ – Drift Governance architecture. Event normalization flows into the Governance Layer, which controls Safe Mode Execution, Quant Vault logging, Human Oversight, and the Adaptation Layer.

Conclusion

Ungoverned AI is not unsafe by malice; it is unsafe by drift. Systems rarely fail in a single, dramatic moment. They fail gradually, through the accumulation of small misalignments that go unseen until they cross a threshold.

The Drift Governance Layer turns governance into a living part of the stack—a layer that detects operational drift, interprets risk, enforces oversight, and preserves alignment across time.

This is how enterprises build AI they can trust, and how AI becomes safe without becoming static.

Adaptive Intelligence Layers™ is the architecture that will define the next era of intelligent systems.
